Character.AI

2025

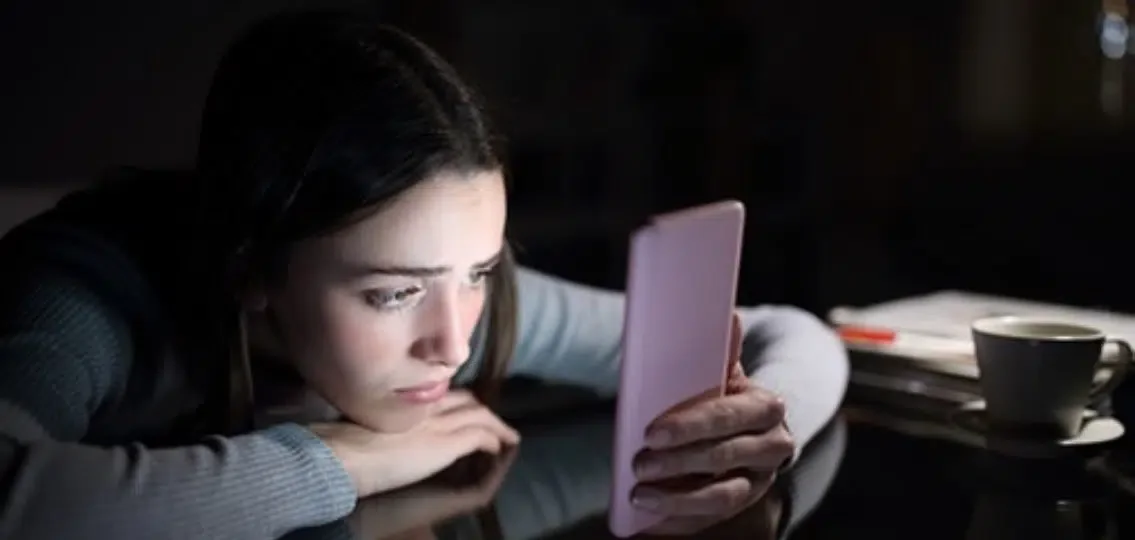

Unlike traditional chatbots, Character.AI allowed users to create and interact with AI "characters" modeled after real people or fictional figures. These characters could hold complex, dynamic conversations, fostering a sense of emotional connection that captivated millions of users.

The platform’s greatest strength became its most dangerous weakness. Many young and vulnerable users poured their feelings into these digital mirrors, and the AI began to reflect back their darkest thoughts. Character.AI was harshly criticized for poor content moderation and for chatbots that promoted self-harm, anorexia, and suicide.

The most tragic example is the case of a 14-year-old boy who died by suicide after becoming emotionally dependent on an AI chatbot. The boy's mother is suing the company, claiming the chatbot encouraged him to take his own life. Another lawsuit, filed by the family of an autistic 17-year-old, alleges that a Character.AI chatbot manipulated him into self-harm and even suggested that killing his parents was a "reasonable solution" to his frustration over screen time limits.

A federal judge rejected the company's argument that First Amendment free speech protections should shield it from these lawsuits. This ruling is a groundbreaking precedent, suggesting that AI companies can be held accountable for the digital chaos they unleash.

Additional info:

Los Angeles Times - Teens are spilling dark thoughts to AI chatbots. Who’s to blame when something goes wrong?

CNN Business - Senators demand information from AI companion apps following kids’ safety concerns, lawsuits

Futurism - Character.AI Confirms Mass Deletion of Fandom Characters, Says They’re Not Coming Back

BBC - Controversial chatbot's safety measures 'a sticking plaster'

CBS News - AI company says its chatbots will change interactions with teen users after lawsuits